Loading & Querying large datasets

Introducing Shapelets, a platform for data scientists that allows you to digest and manipulate large datasets in the most efficient way.

In our recent Livestream, we focused on data management and how Shapelets stands out compared to other tools. You can watch the live demonstration in the video above.

Data Ingestion: Loading datasets

Shapelets from a Jupyter Notebook

In our first example, we demonstrated Shapelets’ ingestion capabilities using the New York Taxi dataset from 2009, which is 5.3 GB in size. For perspective, a single month of data is approximately 400 MB. We compared the time each tool needed to load one 400 MB month and compute the mean number of passengers:

- Pandas took 15 seconds to load the dataset and calculate the mean number of passengers.

- Polars took 12 seconds.

- With Shapelets Data Apps, we were able to complete the same calculation in just 3 seconds.

We then ran the same comparison on the full 5.3 GB dataset. Neither Pandas nor Polars could complete the computation on a file of this size. The Shapelets API finished in 9.72 seconds, which is faster than either library managed on the 400 MB file. This represents a significant saving in both time and resources.
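The benchmarked task itself is simple. The sketch below is not Shapelets code. It is a plain-Python illustration (standard library only) of why file size matters:

- `mean_eager` reads every row into memory before averaging, similar in spirit to loading a whole DataFrame.
- `mean_streaming` keeps only a running total and count, so its memory use does not grow with the file.

The function names are hypothetical. The column name `passenger_count` is an assumption, so check the header of your copy of the 2009 taxi files.

```python
import csv
import io
import time


def mean_eager(lines, column):
    """Read every row into memory first, then average one column."""
    rows = list(csv.DictReader(lines))  # the whole file is held in memory
    values = [float(r[column]) for r in rows if r[column]]  # skip empty cells
    return sum(values) / len(values) if values else float("nan")


def mean_streaming(lines, column):
    """Average one column while reading row by row; only one row is held at a time."""
    total, count = 0.0, 0
    for row in csv.DictReader(lines):
        if row[column]:  # skip empty cells
            total += float(row[column])
            count += 1
    return total / count if count else float("nan")


def timed(fn, *args):
    """Return (result, elapsed seconds) for one call of fn."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    # Tiny in-memory stand-in for one month of taxi trips (hypothetical values).
    sample = "passenger_count,fare_amount\n1,8.5\n2,12.0\n,5.0\n3,20.1\n"
    for fn in (mean_eager, mean_streaming):
        value, seconds = timed(fn, io.StringIO(sample), "passenger_count")
        print(f"{fn.__name__}: mean={value:.2f} in {seconds:.6f}s")
```

To run it on a real file, open the file with `open(path, newline="")` and pass the file object instead of the `io.StringIO` sample.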

Shapelets outperforms the other tools both in the resources it uses and in the time it takes to load and compute a dataset.

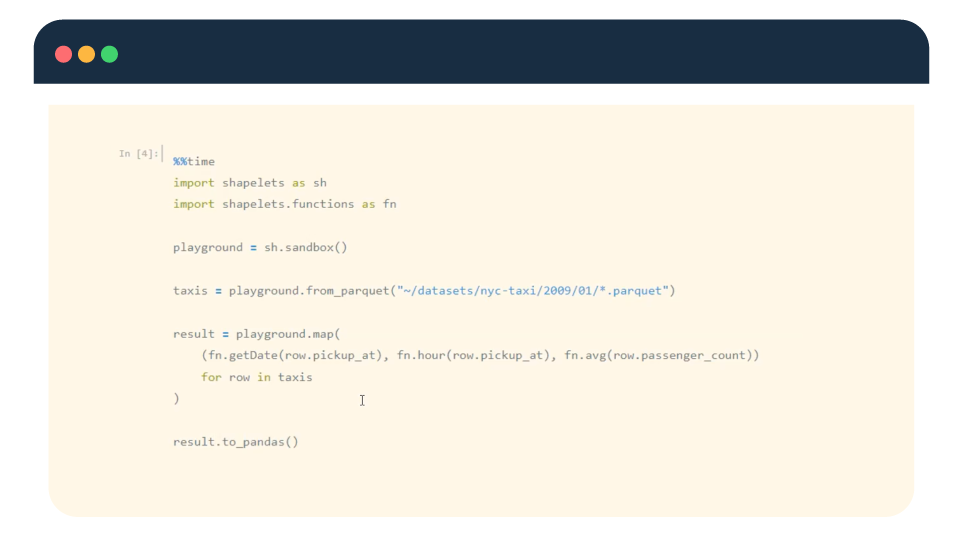

Although the syntax differs across the three libraries, Shapelets’ syntax is designed to be intuitive for data scientists. For the same use case, we used the Shapelets API to create a sandbox, the environment in which we query the data. We could mix and match any dataset in the organization (CSV, Parquet, Arrow) and then query it with a simple, intuitive expression. We designed the syntax with input from our customers so that it is easy for everyone to understand.

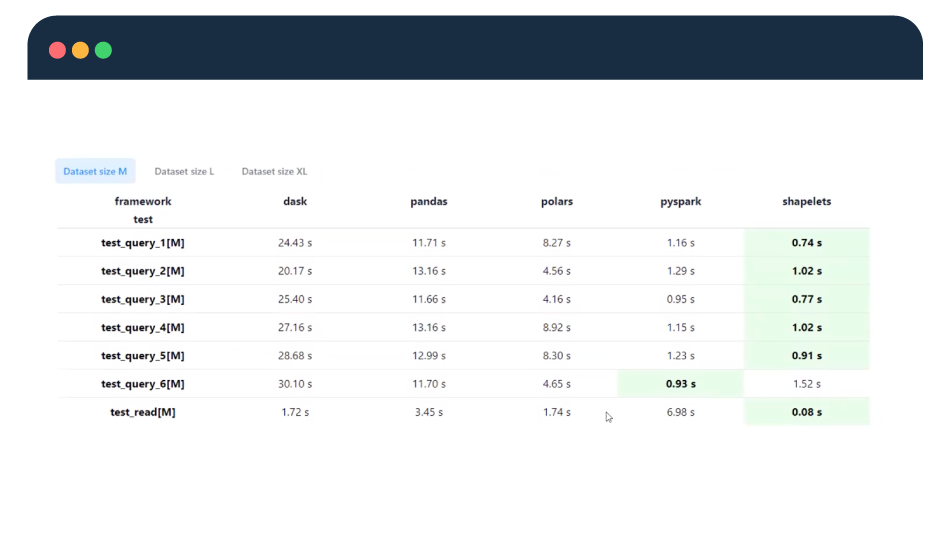
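Shapelets’ own query syntax is not reproduced here. As a rough analogy for "mix and match sources, then query them with one expression", the following sketch uses Python’s built-in `sqlite3` module:

- Trips arrive as CSV, and zone names arrive as JSON lines.
- Both are loaded into one in-memory database.
- A single SQL query joins them.

CSV and JSON stand in for the Parquet and Arrow files mentioned above only because the standard library cannot read those formats. All data, table names, and functions here are hypothetical.

```python
import csv
import io
import json
import sqlite3

# Hypothetical inputs: trips arrive as CSV, zone names as JSON lines.
TRIPS_CSV = "trip_id,zone_id,passengers\n1,10,2\n2,11,1\n3,10,4\n"
ZONES_JSONL = '{"zone_id": 10, "name": "Midtown"}\n{"zone_id": 11, "name": "SoHo"}\n'


def load_sources(conn):
    """Load both sources, whatever their original format, into one database."""
    conn.execute("CREATE TABLE trips (trip_id INTEGER, zone_id INTEGER, passengers INTEGER)")
    rows = [
        (int(r["trip_id"]), int(r["zone_id"]), int(r["passengers"]))
        for r in csv.DictReader(io.StringIO(TRIPS_CSV))
    ]
    conn.executemany("INSERT INTO trips VALUES (?, ?, ?)", rows)

    conn.execute("CREATE TABLE zones (zone_id INTEGER, name TEXT)")
    zones = [json.loads(line) for line in ZONES_JSONL.splitlines() if line]
    # Named placeholders are filled from each dict's keys.
    conn.executemany("INSERT INTO zones VALUES (:zone_id, :name)", zones)


def passengers_by_zone(conn):
    """One query expression across both sources."""
    query = """
        SELECT z.name, SUM(t.passengers) AS total
        FROM trips AS t JOIN zones AS z ON t.zone_id = z.zone_id
        GROUP BY z.name
        ORDER BY total DESC
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sources(conn)
    print(passengers_by_zone(conn))  # [('Midtown', 6), ('SoHo', 1)]
```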

In terms of performance, we have been running benchmarks in a formal test environment on the New York taxi dataset, varying the file size, the query, and the library. For medium-sized files, the Shapelets API was the fastest in almost all cases, by a wide margin over Pandas and Dask.

For larger files, most libraries were unable to complete the computation, and there was no significant difference between Spark and Shapelets.

Because we want to be the most competitive data management library, we’re working hard to improve our performance on large files.
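The benchmark grid described above (every combination of library, query, and dataset size) can be expressed as a small harness. This is a generic sketch, not the test environment we used:

- `run_benchmark` and the toy engines in the usage block are hypothetical.
- It reports the median of a few repeats.
- It records `None` when an engine raises `MemoryError`, which is one way a "could not compute" result can show up.

```python
import statistics
import time
from itertools import product


def run_benchmark(engines, queries, datasets, repeats=3):
    """Time every (engine, query, dataset) combination and keep the median.

    engines:  name -> callable(query, data) that executes the query
    queries:  name -> query object understood by the engines
    datasets: name -> data (for example a path or an in-memory sample)
    """
    results = {}
    for (e_name, engine), (q_name, query), (d_name, data) in product(
        engines.items(), queries.items(), datasets.items()
    ):
        timings = []
        try:
            for _ in range(repeats):
                start = time.perf_counter()
                engine(query, data)
                timings.append(time.perf_counter() - start)
            results[(e_name, q_name, d_name)] = statistics.median(timings)
        except MemoryError:
            # Record that this engine could not handle the dataset instead of aborting.
            results[(e_name, q_name, d_name)] = None
    return results


if __name__ == "__main__":
    data = {"small": list(range(10_000)), "medium": list(range(1_000_000))}
    queries = {"mean": "mean", "max": "max"}
    engines = {
        "builtin": lambda q, d: sum(d) / len(d) if q == "mean" else max(d),
        "statistics": lambda q, d: statistics.fmean(d) if q == "mean" else max(d),
    }
    for key, seconds in run_benchmark(engines, queries, data).items():
        print(key, "failed" if seconds is None else f"{seconds:.4f}s")
```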

Shapelets Sandbox playground

In this example, we explored the Sandbox and the relationships it builds between successive operations.

First, the Sandbox generates a unified execution plan for your data, regardless of its format. For example, we can quickly return the format of the data and the rows in each dataset, return the schema, and select a region of the data. Our free examples cover a wide range of other functions.
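The metadata calls mentioned above can be illustrated on a single CSV source: format, row count, schema, and selecting a region. This plain-Python sketch is not the Shapelets Sandbox API. `describe`, `select_region`, and `infer_type` are hypothetical helpers. The schema is inferred from a sample of rows, and the row count is taken in one streaming pass.

```python
import csv
import io
from itertools import islice


def infer_type(values):
    """Return the narrowest of int/float/str that parses every non-empty value."""
    for caster in (int, float):
        try:
            for v in values:
                if v != "":
                    caster(v)
            return caster.__name__
        except ValueError:
            continue  # try the next, wider type
    return "str"


def describe(name, text, sample_rows=100):
    """Report format (from the file name), row count and inferred schema."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    sample, count = [], 0
    for row in reader:  # single pass: keep a sample, count everything
        if count < sample_rows:
            sample.append(row)
        count += 1
    schema = {col: infer_type([r[i] for r in sample]) for i, col in enumerate(header)}
    return {"format": name.rsplit(".", 1)[-1], "rows": count, "schema": schema}


def select_region(text, start, stop, columns):
    """Return rows [start, stop) restricted to the given column names."""
    reader = csv.DictReader(io.StringIO(text))
    return [{c: row[c] for c in columns} for row in islice(reader, start, stop)]


if __name__ == "__main__":
    trips = "id,fare,zone\n1,8.5,A\n2,12,B\n3,20.1,C\n"
    print(describe("trips.csv", trips))
    print(select_region(trips, 1, 3, ["id", "zone"]))
```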

The playground lets you apply transformations to large files interactively. The key idea is that computation is deferred: it happens only when the results are actually requested.

As a result, when we build a new transformation on top of a previous one, all of the transformations are chained together and executed as a single, efficient computation.
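The deferred, chained computation described above is usually called lazy evaluation. The sketch below is a minimal stand-alone illustration of the idea, not Shapelets internals. The hypothetical `LazyFrame` class only records each `filter` or `with_column` step, and `collect()` runs the whole chain in a single pass over the rows. Polars offers a real lazy API of this kind through `scan_csv(...)` and `.collect()`.

```python
class LazyFrame:
    """Records transformations and runs them only when collect() is called."""

    def __init__(self, source, steps=()):
        self._source = source       # any re-iterable collection of row dicts
        self._steps = tuple(steps)  # the accumulated plan

    def filter(self, predicate):
        # Returns a new frame with one more step; nothing is computed yet.
        return LazyFrame(self._source, self._steps + (("filter", predicate),))

    def with_column(self, name, fn):
        # Adds a derived column to the plan; nothing is computed yet.
        return LazyFrame(self._source, self._steps + (("with_column", (name, fn)),))

    def plan(self):
        return [kind for kind, _ in self._steps]

    def collect(self):
        """Execute the whole chain in a single pass over the source."""
        out = []
        for row in self._source:
            keep = True
            for kind, arg in self._steps:
                if kind == "filter":
                    if not arg(row):
                        keep = False
                        break
                else:
                    name, fn = arg
                    row = {**row, name: fn(row)}  # new dict; source rows untouched
            if keep:
                out.append(row)
        return out


if __name__ == "__main__":
    trips = [
        {"passengers": 1, "fare": 8.5},
        {"passengers": 3, "fare": 20.1},
        {"passengers": 2, "fare": 12.0},
    ]
    busy = LazyFrame(trips).filter(lambda r: r["passengers"] >= 2)  # no work yet
    priced = busy.with_column("fare_pp", lambda r: r["fare"] / r["passengers"])
    print(priced.plan())     # ['filter', 'with_column']
    print(priced.collect())  # rows are computed here, in one pass
```

Because each call returns a new frame, `busy` and `priced` share the same source but keep separate plans, and no row is touched until `collect()` runs.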

Questions about Shapelets

1. What would be the first steps to start working with the data ingestion feature?

“First, Shapelets can be found on PyPI, where you will find instructions for all of its features; a Docker image is also available. Shapelets is currently available”

2. What can we expect from the next releases?

“We have been working on adding data streaming capabilities to the platform for the next release of the product.”

If you have sensors in an industrial or medical environment, you will soon be able to use Shapelets to collect all of their data and store it securely, with the data directly available for you to use.

Clément Mercier

Data Scientist Intern

Clément Mercier received his Bachelor’s Degree in Finance from Hult International Business School in Boston and is currently finishing his Master’s Degree in Big Data at IE School of Technology.

Clément has international experience with both startups and large corporations, such as the Zinneken’s Group, MediateTech in Boston, and Nestlé in Switzerland.