What is RAG?

Retrieval-Augmented Generation

29 October 2024 | 6 minutes

GenAI | The key to empowering and transforming your business

In today’s business environment, quick and accurate access to relevant information can make the difference in competitiveness and decision-making. Generative Artificial Intelligence (GenAI) has emerged as one of the most revolutionary technologies of the past decade, transforming industries and redefining the interaction between humans and machines.

This article explains what GenAI is and how it relates to Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and vector databases.

| What is Generative Artificial Intelligence?

Generative Artificial Intelligence refers to a type of AI capable of creating new and original content from existing data. Unlike traditional AI systems, which are limited to analyzing and processing information, GenAI can generate text, images, music, and other types of content that mimic human creativity. This capability is based on advanced machine learning models that learn patterns and structures from large volumes of data.

Combining Generative Artificial Intelligence with LLMs, RAG, and vector databases creates a powerful ecosystem for creating and managing intelligent content:

- Intelligent Content Generation: LLMs generate coherent and relevant text based on learned patterns.

- Specific Information Retrieval: RAG complements this generation by extracting precise data from vector databases.

- Optimization and Scalability: Vector databases ensure that the necessary information is available quickly and efficiently.

- Continuous Updating: This system allows for the incorporation of new knowledge and data without the need to completely retrain the models, thus maintaining the relevance and accuracy of the generated content.

LLMs and their challenges

Large Language Models (LLMs) are the core of GenAI in natural language processing. These models are trained with vast amounts of text and are capable of understanding and generating human language in a coherent and contextually relevant manner.

Large-scale language models are deep neural networks designed to autonomously process and generate text. They have been widely used to automate assistance in various sectors, such as legal or healthcare, where generating detailed and contextualized responses is key to improving user experience.

| However, traditional LLMs have a critical limitation

These models cannot directly access up-to-date data, as they rely on the datasets used during their training. This limitation means that if information changes, the model cannot reflect these changes unless it is retrained, which is a costly and complex process.

Retrieval-Augmented Generation (RAG) solves this problem by integrating information retrieval modules that allow the LLM to access dynamic data in real time. This drastically enhances the models’ ability to generate relevant and accurate responses. For example, in a legal query, a RAG system can access recent jurisprudence that was not available at the time of the model’s training, thereby ensuring a precise and up-to-date response.
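The idea that new knowledge lives in a searchable store rather than in the model's weights can be sketched in a few lines of Python. This is only an illustration: `KnowledgeStore`, its word-overlap matching, and the sample policy texts are hypothetical stand-ins invented for this example, not a real vector database or real data.

```python
import re

def _words(text):
    """Lowercased set of alphanumeric tokens in a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class KnowledgeStore:
    """Hypothetical in-memory document store (a stand-in for a vector database)."""

    def __init__(self):
        self.documents = []

    def add(self, text):
        # Adding a document makes it retrievable immediately; no model is retrained.
        self.documents.append(text)

    def retrieve(self, question, min_overlap=2):
        """Return the document sharing the most words with the question, or None
        if no document shares at least min_overlap words."""
        q_words = _words(question)
        best, best_overlap = None, min_overlap - 1
        for doc in self.documents:
            overlap = len(q_words & _words(doc))
            if overlap > best_overlap:
                best, best_overlap = doc, overlap
        return best

store = KnowledgeStore()
store.add("Remote work is allowed two days per week.")

question = "What is the travel reimbursement limit?"
before = store.retrieve(question)  # nothing relevant is stored yet

# Information that appeared after the model was trained is simply added.
store.add("The travel reimbursement limit is 500 euros per trip.")
after = store.retrieve(question)

print("before:", before)
print("after: ", after)
```

The model itself never changes between the two calls. Only the store does, which is why RAG avoids the retraining cost described above.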

Currently, new LLMs are being developed to handle larger inputs, with the drawback of increased computational costs and practical limitations. As the context window increases, costs rise steeply: in standard Transformer self-attention, compute grows roughly with the square of the input length.
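To get a feel for how quickly cost grows with input length, here is a rough back-of-the-envelope calculation. It assumes dense self-attention, where every token is compared with every other token, and it counts only those pairwise comparisons. Real models have other costs and may use optimizations that change the picture, so treat the numbers as an illustration rather than a benchmark.

```python
def attention_pairs(num_tokens):
    """Token-to-token comparisons in one dense self-attention pass."""
    return num_tokens * num_tokens

baseline = 4_000  # assumed reference context size, chosen only for illustration
for n in (4_000, 32_000, 128_000):
    ratio = attention_pairs(n) / attention_pairs(baseline)
    print(f"{n:>7,} tokens -> {attention_pairs(n):>18,} pairs ({ratio:,.0f}x the baseline)")
```

Under this assumption, an input 8 times longer needs 64 times more comparisons, and one 32 times longer needs 1,024 times more.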

This can be confirmed in the following comparative graph of the main LLMs from 2023 and what has occurred so far in 2024:

RAG + Vector Database

Retrieval-Augmented Generation (RAG) is a technique that combines natural language generation with the retrieval of relevant information from a vector database. This combination enhances the precision and relevance of the responses generated by language models.

| But… What is a vector database?

Vector databases like Shapelets VectorDB work by representing documents as vectors, or “embeddings.” An embedding is a numerical vector that represents an element such as a word, a phrase, an image, or any other type of data, and captures its essential characteristics and its semantic relationships with other elements.

These embeddings are stored in an optimized manner and are used to perform fast and accurate searches. By retrieving these vectors, the LLM can better contextualize the information and generate coherent and specific responses tailored to the user’s needs.

Shapelets VectorDB also facilitates semantic search, which is much more powerful than traditional keyword-based search. Semantic search captures the context and intent behind a query, retrieving relevant information even when the query does not use the same words as the documents. This capability is fundamental to more precise and useful information retrieval.
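A minimal sketch of vector search may help make this concrete. The three-dimensional vectors below are hand-written and purely hypothetical; a real embedding model would produce them automatically, with hundreds or thousands of dimensions. The `search` function is an illustrative brute-force scan, not the Shapelets VectorDB API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, written by hand for illustration only.
index = {
    "Contract termination clauses": [0.9, 0.1, 0.0],
    "Patient intake procedures": [0.1, 0.9, 0.1],
    "Quarterly sales report": [0.0, 0.2, 0.9],
}

def search(query_vector, index, top_k=2):
    """Return the top_k (title, score) pairs, most similar first."""
    scored = [(title, cosine_similarity(query_vector, vec))
              for title, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Assumed embedding of a query such as "How can I end an agreement?"
query_vector = [0.8, 0.2, 0.1]
results = search(query_vector, index)

for title, score in results:
    print(f"{score:.2f}  {title}")
```

The example query shares no words with "Contract termination clauses", yet that document ranks first because its vector points in nearly the same direction. A production vector database replaces the brute-force scan with indexes designed for fast search at scale.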

RAG: How does it work?

A RAG system is an innovative architecture in the field of artificial intelligence and natural language processing. Its main objective is to enhance the ability of generative models to provide more accurate and contextually relevant responses by combining text generation with information retrieval mechanisms.

This means that instead of returning a list of relevant documents, the model directly generates a response enriched with the retrieved information, making the process more efficient and the result more useful for the end user. This reduces operational costs and allows companies to stay up-to-date with rapidly changing information.

To illustrate how RAG works, consider the case of a data scientist tasked with analyzing large volumes of information from different sources to improve decision-making in their organization. The main challenges they face include:

• Identifying relevant information within a vast dataset.

• Updating data in real time.

• Reducing infrastructure costs.

The process begins with a natural language query, which is sent to the retrieval module, where RAG retrieves relevant information and documents from the Shapelets VectorDB vector database.

This stage is crucial for obtaining updated or specific context that may be relevant to answering the user’s question. It can be considered a phase of augmented retrieval, where the system tries to complement the information with precise data.

Once the context from the documents is retrieved, it is sent to the LLM after passing through the Apply phase. Here, the LLM processes both the original question and the retrieved information. The model applies its natural language understanding and generation capabilities to craft a coherent and detailed response.

This model has advanced language comprehension skills and is used to create a response by combining the retrieved information with its general knowledge.
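The flow described above (query, retrieval, augmented prompt, generation) can be sketched as follows. Everything here is a simplified assumption: `embed` is a toy bag-of-words stand-in for a real embedding model, `fake_llm` is a placeholder for a call to a real LLM, and the sample documents are invented for the example.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(question, documents, top_k=1):
    """Retrieval step: rank documents by similarity to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: similarity(q, embed(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, context_docs):
    """Augmentation step: combine the retrieved context with the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )

def fake_llm(prompt):
    """Hypothetical placeholder; a real system would call an LLM here."""
    return f"[model response based on a prompt of {len(prompt)} characters]"

def answer(question, documents, llm=fake_llm, top_k=1):
    """Full pipeline: retrieve, augment, generate."""
    context_docs = retrieve(question, documents, top_k)
    prompt = build_prompt(question, context_docs)
    return llm(prompt), prompt

documents = [
    "The sales forecast for Q3 was revised upward in September.",
    "Server maintenance is scheduled every Sunday at 02:00.",
    "The 2024 data retention policy keeps raw logs for 90 days.",
]
response, prompt = answer("How long are raw logs kept?", documents)
print(prompt)
print(response)
```

Passing the LLM in as a parameter keeps the retrieval logic independent of any particular model, in line with the idea that the same store can serve different LLMs.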

Challenges of RAG and the role of Shapelets VectorDB

| Main challenges of RAG

Despite the significant advantages that RAG offers in improving LLM responses, there are several key challenges that need to be addressed to ensure its effectiveness:

• Computational Overhead: RAG involves continuous retrieval and processing of external information, which can lead to latency issues, especially in large-scale queries or real-time applications.

• Data Integration Complexity: Managing and integrating multiple data sources for retrieval requires efficient handling and synchronization, which can be complex.

• Quality and Relevance of Information: The effectiveness of RAG depends on the quality of external sources. Any inconsistency or outdated information directly affects the final result.

• Data Exposure Security: Connecting to multiple external sources can put sensitive information at risk if adequate controls are not in place, exposing confidential data and compromising information security.
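Two of these challenges, information quality and data exposure, are often handled with a filtering step between retrieval and generation. The sketch below is one possible approach under stated assumptions: the `Candidate` fields, the thresholds, and the role names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Candidate:
    text: str
    score: float              # similarity score from the retriever, higher is better
    updated: date             # last revision date of the source document
    allowed_roles: frozenset  # roles permitted to read the document

def filter_candidates(candidates, user_roles, today, min_score=0.5, max_age_days=365):
    """Keep only candidates that are relevant, recent, and visible to the user."""
    kept = []
    for c in candidates:
        if c.score < min_score:
            continue  # too weakly related to the query
        if (today - c.updated).days > max_age_days:
            continue  # likely outdated
        if not (c.allowed_roles & user_roles):
            continue  # the user is not authorized to see this document
        kept.append(c)
    return kept

candidates = [
    Candidate("Current pricing sheet", 0.82, date(2024, 9, 1), frozenset({"sales", "finance"})),
    Candidate("Old pricing sheet", 0.80, date(2021, 1, 15), frozenset({"sales"})),
    Candidate("Payroll summary", 0.75, date(2024, 8, 1), frozenset({"hr"})),
    Candidate("Office party memo", 0.10, date(2024, 10, 1), frozenset({"sales", "hr"})),
]

visible = filter_candidates(candidates, user_roles={"sales"}, today=date(2024, 10, 29))
print([c.text for c in visible])
```

Filtering before the prompt is built means that outdated or restricted text never reaches the LLM, so it cannot leak into the generated answer.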

| Shapelets Vector DB’s solution to RAG challenges

Shapelets Vector DB addresses these challenges by providing a highly optimized solution for data retrieval and management. Its architecture is designed to handle large volumes of vector data efficiently, minimizing latency issues through the optimization of ingestion, indexing, and retrieval processes.

Additionally, Shapelets Vector DB improves the quality of retrieved information by supporting semantic search capabilities, which allow for selecting more relevant and contextually appropriate data. This helps filter out noise and focus on the most pertinent information, ensuring that the RAG system is both accurate and efficient.

In terms of security, Shapelets Vector DB implements strict controls and confidential data management mechanisms, including robust authentication, encryption, and granular access policies, to ensure that only authorized users can access critical information, thereby guaranteeing the privacy and security of the handled data.

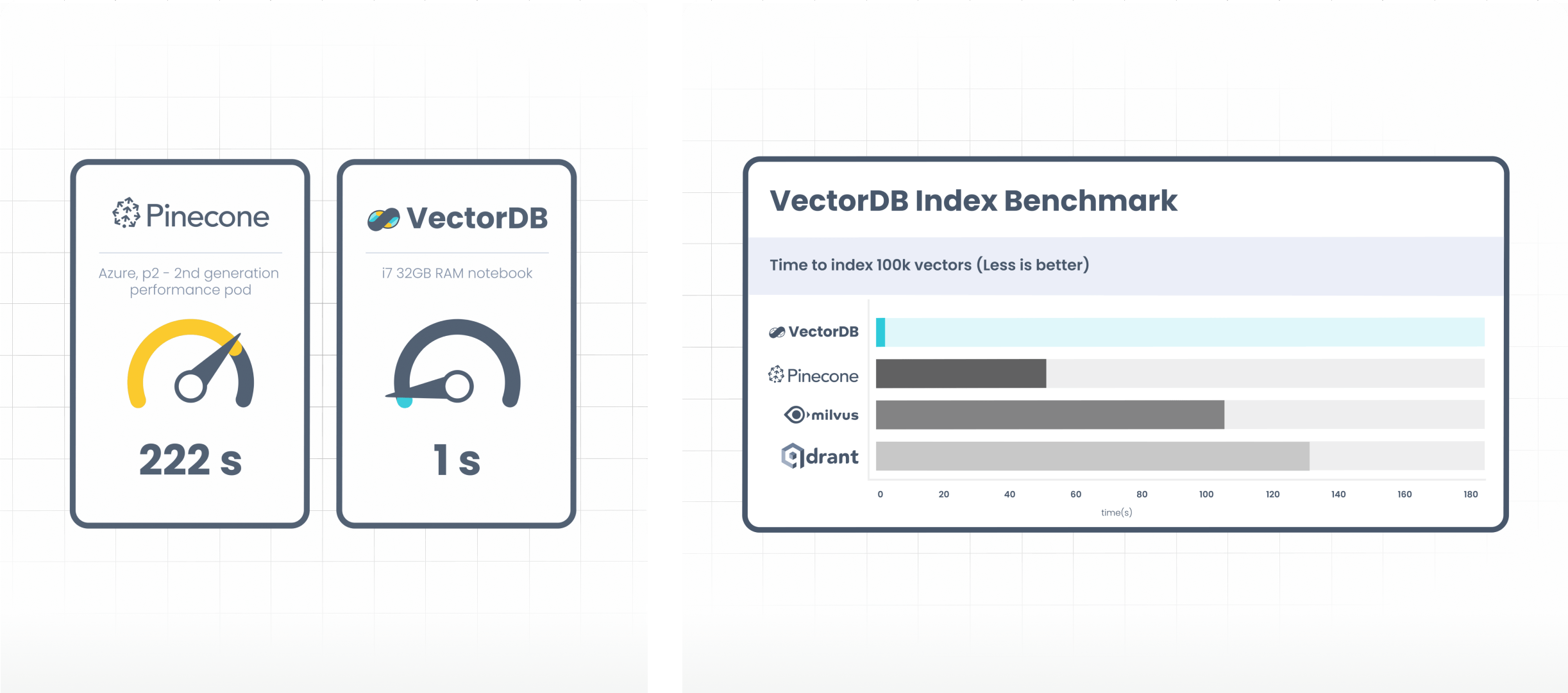

Shapelets VectorDB is an optimal solution for RAG systems. It supports any vector dimensionality and works with any LLM. It stands out in efficiency, allowing rapid ingestion, indexing, storage, and retrieval of vector data at scale.

It surpasses most vector databases with minimal infrastructure. Take advantage of this opportunity to reduce your TCO by 50%. Double your vector data ingestion rates without the need to scale your infrastructure and enjoy vector indexing accelerations of up to 200 times compared to other market solutions.

Conclusion

In summary, Retrieval-Augmented Generation, supported by vector databases like Shapelets Vector DB, represents a significant evolution in the field of generative artificial intelligence. By providing access to up-to-date information and enhancing the ability of LLMs to generate contextualized responses, Shapelets Vector DB ensures greater efficiency, security, and accuracy, making RAG systems an essential tool for organizations seeking to stay competitive and optimize their decision-making processes.

Want to apply Shapelets to your projects?

Contact us and let’s study your case.